DataTechNotes

The decision tree is one of the best-known and most powerful supervised machine learning algorithms, and it can be used for both classification and regression problems. The model is built from decision rules extracted from the training data. In a regression problem, the model predicts a continuous value instead of a class, and mean squared error is used to measure the quality of each decision (split).
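To make the idea of an MSE-based decision concrete, here is a minimal sketch of how a single split could be chosen on one feature. It is a simplified illustration and not scikit-learn’s actual implementation; the function name `best_split` and the toy arrays are made up for this example.

```python
import numpy as np

def best_split(x, y):
    """Return (threshold, weighted_mse) of the best single split on a 1-D feature."""
    order = np.argsort(x)
    x, y = x[order], y[order]
    best_threshold, best_score = None, np.inf
    for i in range(1, len(x)):
        if x[i] == x[i - 1]:
            continue  # no threshold can separate two equal feature values
        left, right = y[:i], y[i:]
        # Variance of each side equals the MSE around that side's mean;
        # weight each side by its number of samples.
        score = (len(left) * left.var() + len(right) * right.var()) / len(y)
        if score < best_score:
            best_threshold = (x[i] + x[i - 1]) / 2.0
            best_score = score
    return best_threshold, best_score

# Toy data (hypothetical): two clearly separated groups of target values.
x = np.array([1.0, 2.0, 3.0, 10.0, 11.0, 12.0])
y = np.array([5.0, 6.0, 7.0, 20.0, 21.0, 22.0])

threshold, score = best_split(x, y)
print("threshold:", threshold)
print("weighted MSE after split:", score)
```

In this sketch each side of the split would predict the mean of its own targets (6.0 on the left and 21.0 on the right). A full tree repeats the same search recursively inside each side.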

A decision tree model tends to generalize poorly and is sensitive to changes in the training data. A small change in the training dataset may affect the model’s predictive accuracy.
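The sensitivity to training data can be observed with a small experiment. The sketch below fits two fully grown trees on nearly identical data (the second one simply drops 10 rows) and compares their predictions on the same held-out points. The sample sizes, noise level and seeds are arbitrary assumptions chosen only for illustration.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.tree import DecisionTreeRegressor

# Synthetic noisy data; all sizes and seeds here are arbitrary choices.
x, y = make_regression(n_samples=300, n_features=5, noise=10.0, random_state=0)

# First 200 rows for training, last 100 rows as fixed evaluation points.
x_train, y_train = x[:200], y[:200]
x_eval = x[200:]

# Tree A sees all 200 training rows; tree B sees the same rows minus the first 10.
tree_a = DecisionTreeRegressor(random_state=0).fit(x_train, y_train)
tree_b = DecisionTreeRegressor(random_state=0).fit(x_train[10:], y_train[10:])

pred_a = tree_a.predict(x_eval)
pred_b = tree_b.predict(x_eval)

# Share of evaluation points for which the two trees disagree.
changed = np.mean(pred_a != pred_b)
print("share of evaluation points whose prediction changed:", changed)
```

Because a tree grown with default settings memorizes its training rows, removing even a few of them can reroute predictions for nearby points. Limiting the depth or averaging many trees are common ways to reduce this effect.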

The Scikit-learn API provides the DecisionTreeRegressor class to apply the decision tree method to regression tasks.
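As a quick illustration of the decision rules a fitted regressor holds, the sketch below trains a shallow tree on made-up one-dimensional data and prints its rules with `export_text` from `sklearn.tree`. The toy data and the `max_depth=2` setting are assumptions chosen to keep the printed tree small.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor, export_text

# Toy data (made up): one feature, target jumps from about 1 to about 5 at x = 6.
x = np.arange(12, dtype=float).reshape(-1, 1)
y = np.where(x.ravel() < 6, 1.0, 5.0) + 0.1 * np.sin(x.ravel())

# A shallow tree keeps the printed rule set short.
tree = DecisionTreeRegressor(max_depth=2, random_state=0)
tree.fit(x, y)

# export_text renders the learned thresholds and leaf values as plain text.
rules = export_text(tree)
print(rules)
```

Each printed line is either a threshold test on a feature or a leaf with the value the tree predicts for samples that reach it.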

In this tutorial, we’ll briefly learn how to fit and predict regression data by using the DecisionTreeRegressor class in Python. We’ll apply the model to randomly generated regression data and to the Boston housing dataset to check its performance. The tutorial covers:

- Preparing the data

- Training the model

- Predicting and accuracy check

- Boston housing dataset prediction

- Source code listing

    from sklearn.tree import DecisionTreeRegressor
    # Note: load_boston was removed in scikit-learn 1.2, so this import needs an older release.
    from sklearn.datasets import load_boston
    from sklearn.datasets import make_regression
    from sklearn.metrics import mean_squared_error
    from sklearn.model_selection import train_test_split
    from sklearn.preprocessing import scale
    import matplotlib.pyplot as plt
    from sklearn import set_config

First, we’ll generate random regression data with the make_regression() function. The dataset contains 10 features and 5000 samples.

    x, y = make_regression(n_samples=5000, n_features=10)
    print(x[0:2])
    print(y[0:2])

    [[ 1.773  2.534  0.693 -1.11   1.492  0.631 -0.577  0.085 -1.308  1.024]
     [ 1.953 -1.362  1.294  1.025  0.463 -0.485 -1.849  1.858  0.483 -0.52 ]]
    [120.105 262.69 ]

Next, we’ll standardize both x and y and then split them into train and test parts. Tree splits do not depend on the scale of the features, so this step is not required for accuracy, but standardizing y expresses the error metrics below on a unit-variance scale. Here, we’ll hold out 10 percent of the samples as test data.

    x = scale(x)
    y = scale(y)

    xtrain, xtest, ytrain, ytest = train_test_split(x, y, test_size=0.10)

Next, we’ll define the regressor model by using the DecisionTreeRegressor class. Here, we can use the default parameters of the class. The default values are shown below.

    set_config(print_changed_only=False)
    dtr = DecisionTreeRegressor()
    print(dtr)

    DecisionTreeRegressor(ccp_alpha=0.0, criterion='mse', max_depth=None,
                          max_features=None, max_leaf_nodes=None,
                          min_impurity_decrease=0.0, min_impurity_split=None,
                          min_samples_leaf=1, min_samples_split=2,
                          min_weight_fraction_leaf=0.0, presort='deprecated',
                          random_state=None, splitter='best')

    dtr.fit(xtrain, ytrain)
    score = dtr.score(xtrain, ytrain)
    print("R-squared:", score)

    R-squared: 0.9796146270086489

Now, we can predict the test data by using the trained model. We can check the accuracy of the predictions by using the MSE and RMSE metrics.

    ypred = dtr.predict(xtest)
    mse = mean_squared_error(ytest, ypred)
    print("MSE: ", mse)
    print("RMSE: ", mse**(1/2.0))

    MSE: 0.130713987032462
    RMSE: 0.3615

The RMSE is the square root of the MSE, so an MSE of about 0.1307 corresponds to an RMSE of about 0.3615.
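To see how these metrics relate to each other, the sketch below computes MSE, RMSE and R-squared by hand with NumPy on a tiny made-up pair of arrays and compares the results with scikit-learn’s `mean_squared_error` and `r2_score`. The numbers are illustrative only and are not taken from the model above.

```python
import numpy as np
from sklearn.metrics import mean_squared_error, r2_score

# Tiny made-up example, not output from the tutorial's model.
y_true = np.array([3.0, -0.5, 2.0, 7.0])
y_pred = np.array([2.5, 0.0, 2.0, 8.0])

# MSE: mean of the squared residuals.
mse = np.mean((y_true - y_pred) ** 2)

# RMSE: square root of the MSE, in the same units as the target.
rmse = np.sqrt(mse)

# R-squared: 1 minus (residual sum of squares / total sum of squares).
ss_res = np.sum((y_true - y_pred) ** 2)
ss_tot = np.sum((y_true - y_true.mean()) ** 2)
r2 = 1.0 - ss_res / ss_tot

print("MSE: ", mse)
print("RMSE:", rmse)
print("R-squared:", r2)
print("matches sklearn MSE:", np.isclose(mse, mean_squared_error(y_true, y_pred)))
print("matches sklearn R-squared:", np.isclose(r2, r2_score(y_true, y_pred)))
```

Note that for an MSE below 1, the RMSE is larger than the MSE, because the square root of a number between 0 and 1 is greater than the number itself.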

    x_ax = range(len(ytest))
    plt.plot(x_ax, ytest, linewidth=1, label="original")
    plt.plot(x_ax, ypred, linewidth=1.1, label="predicted")
    plt.title("y-test and y-predicted data")
    plt.xlabel('X-axis')
    plt.ylabel('Y-axis')
    plt.legend(loc='best', fancybox=True, shadow=True)
    plt.grid(True)
    plt.show()

Running the above code produces a plot that shows the original and predicted test data.

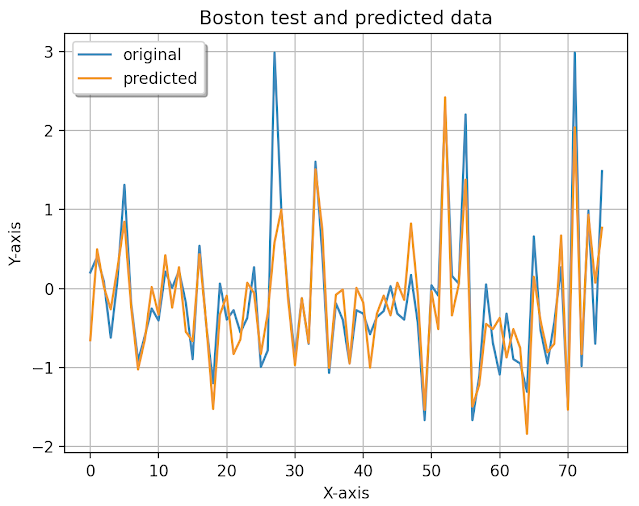

We’ll apply the same method we’ve learned above to the Boston housing price regression dataset. We’ll load it by using the load_boston() function, then scale it and split it into train and test parts. Then, we’ll define the model, check the training accuracy, and predict the test data.

    print("Boston housing dataset prediction.")
    # load_boston is only available in scikit-learn releases before 1.2.
    boston = load_boston()
    x, y = boston.data, boston.target

    x = scale(x)
    y = scale(y)
    xtrain, xtest, ytrain, ytest = train_test_split(x, y, test_size=.15)

    dtr = DecisionTreeRegressor()
    dtr.fit(xtrain, ytrain)
    score = dtr.score(xtrain, ytrain)
    print("R-squared:", score)

    ypred = dtr.predict(xtest)
    mse = mean_squared_error(ytest, ypred)
    print("MSE: ", mse)
    print("RMSE: ", mse**(1/2.0))

    x_ax = range(len(ytest))
    plt.plot(x_ax, ytest, label="original")
    plt.plot(x_ax, ypred, label="predicted")
    plt.title("Boston test and predicted data")
    plt.xlabel('X-axis')
    plt.ylabel('Y-axis')
    plt.legend(loc='best', fancybox=True, shadow=True)
    plt.grid(True)
    plt.show()

    Boston housing dataset prediction.
    R-squared: 0.9834125970221356
    MSE: 0.2157465095558568
    RMSE: 0.4645

As before, the RMSE is the square root of the MSE, so an MSE of about 0.2157 corresponds to an RMSE of about 0.4645.
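A high training score does not by itself show that the tree generalizes well. One common way to check is to cross-validate a few values of the `max_depth` parameter. The sketch below does this on synthetic data from make_regression() rather than on the Boston data; the noise level, candidate depths, fold count and seeds are all assumptions picked for illustration.

```python
from sklearn.datasets import make_regression
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeRegressor

# Synthetic noisy data; every setting here is an arbitrary illustrative choice.
x, y = make_regression(n_samples=1000, n_features=10, noise=20.0, random_state=1)

results = {}
for depth in (2, 5, 10, None):  # None lets the tree grow until leaves are pure
    model = DecisionTreeRegressor(max_depth=depth, random_state=1)
    # 5-fold cross-validation; each fold is scored with R-squared on held-out rows.
    scores = cross_val_score(model, x, y, cv=5, scoring="r2")
    results[depth] = scores.mean()
    print("max_depth =", depth, "mean CV R-squared:", round(scores.mean(), 3))
```

Comparing the mean scores across depths gives a rough picture of where extra depth stops helping on held-out data. The same loop could be pointed at any other parameter, such as `min_samples_leaf`.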

In this tutorial, we’ve briefly learned how to fit and predict regression data by using the DecisionTreeRegressor class of the Scikit-learn API in Python. The full source code is listed below.

    from sklearn.tree import DecisionTreeRegressor
    # Note: load_boston was removed in scikit-learn 1.2, so this import needs an older release.
    from sklearn.datasets import load_boston
    from sklearn.datasets import make_regression
    from sklearn.metrics import mean_squared_error
    from sklearn.model_selection import train_test_split
    from sklearn.preprocessing import scale
    import matplotlib.pyplot as plt
    from sklearn import set_config

    # Randomly generated regression data
    x, y = make_regression(n_samples=5000, n_features=10)
    print(x[0:2])
    print(y[0:2])

    x = scale(x)
    y = scale(y)
    xtrain, xtest, ytrain, ytest = train_test_split(x, y, test_size=.10)

    # Define and fit the model with default parameters
    set_config(print_changed_only=False)
    dtr = DecisionTreeRegressor()
    print(dtr)

    dtr.fit(xtrain, ytrain)
    score = dtr.score(xtrain, ytrain)
    print("R-squared:", score)

    # Predict test data and check the accuracy
    ypred = dtr.predict(xtest)
    mse = mean_squared_error(ytest, ypred)
    print("MSE: ", mse)
    print("RMSE: ", mse**(1/2.0))

    x_ax = range(len(ytest))
    plt.plot(x_ax, ytest, linewidth=1, label="original")
    plt.plot(x_ax, ypred, linewidth=1.1, label="predicted")
    plt.title("y-test and y-predicted data")
    plt.xlabel('X-axis')
    plt.ylabel('Y-axis')
    plt.legend(loc='best', fancybox=True, shadow=True)
    plt.grid(True)
    plt.show()

    # Boston housing dataset
    print("Boston housing dataset prediction.")
    boston = load_boston()
    x, y = boston.data, boston.target

    x = scale(x)
    y = scale(y)
    xtrain, xtest, ytrain, ytest = train_test_split(x, y, test_size=.15)

    dtr = DecisionTreeRegressor()
    dtr.fit(xtrain, ytrain)
    score = dtr.score(xtrain, ytrain)
    print("R-squared:", score)

    ypred = dtr.predict(xtest)
    mse = mean_squared_error(ytest, ypred)
    print("MSE: ", mse)
    print("RMSE: ", mse**(1/2.0))

    x_ax = range(len(ytest))
    plt.plot(x_ax, ytest, label="original")
    plt.plot(x_ax, ypred, label="predicted")
    plt.title("Boston test and predicted data")
    plt.xlabel('X-axis')
    plt.ylabel('Y-axis')
    plt.legend(loc='best', fancybox=True, shadow=True)
    plt.grid(True)
    plt.show()
